Authored by: Ambika Sharma, Founder and Chief Strategist

Updated as of February 2026

Executive Overview

The fundamental architecture of digital demand has shifted. As of early 2026, the "Keyword Era" is effectively over, replaced by an Intent-First Ecosystem driven by AI reasoning layers. For global enterprise tech firms, this means traditional search strategies are no longer just inefficient; they risk leaving brands invisible. This article explores the rise of LLMO (Large Language Model Optimization), the mechanics of Query Fan-Out and how systems like NeuroRank™ serve as the cognitive moat for modern brands. The mandate for CMOs is clear: stop buying clicks and start buying "Inference Confidence" to avoid category collapse.

Featured Snippet Answers

What is Intent-First Marketing in 2026?

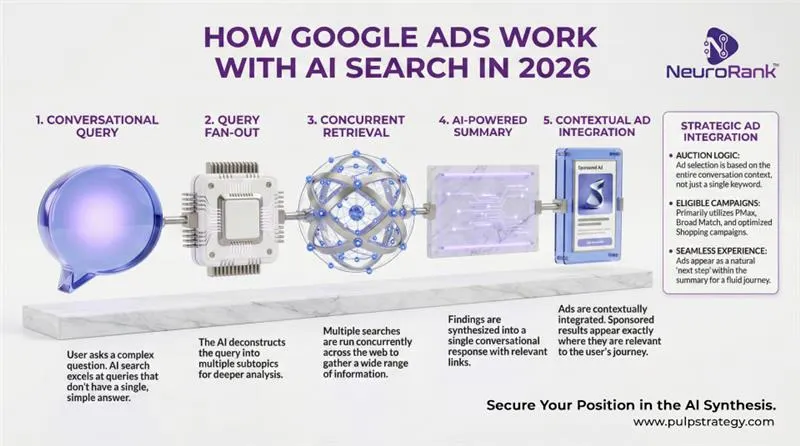

Intent-first marketing is a strategic shift where search engines prioritize the user's underlying goal over specific keywords. Using "Query Fan-Out," AI models decompose complex prompts into multiple sub-intents. Brands win by optimizing for these "need states" rather than specific search terms, ensuring they appear in the AI's reasoning path.

How does LLMO impact PPC performance?

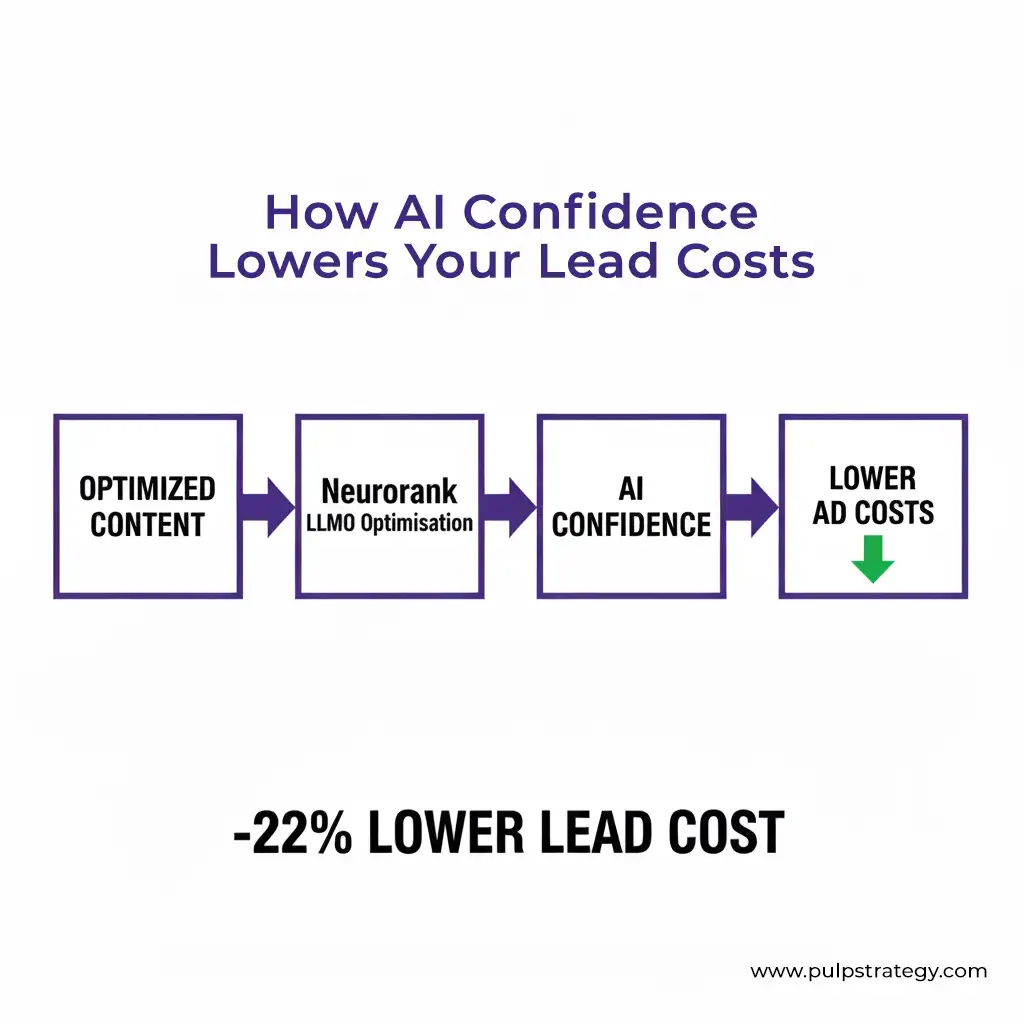

LLMO (Large Language Model Optimization) acts as a "Contribution Multiplier" for PPC. By providing semantically rich data that AI models can easily parse, brands reduce "Inference Friction." This leads to higher Quality Scores, eligibility for conversational AI Mode slots, and significantly lower CPCs compared to unoptimized competitors.

Why is NeuroRank™ essential for enterprise tech?

NeuroRank™ is a governed LLMO operating system that ensures a brand is cited and trusted by models like Gemini and ChatGPT. It prevents "Category Collapse" by mapping signals and engineering content that AI reasoning layers can validate, moving brands from simple search results to proactive AI recommendations.

The Highlights

- How the Mechanics Under the Hood Have Changed

- The Reality of the Intent Auction

- Why LLMO Is the New Cognitive Moat

- The Stack Gap: Why SEO, PPC, and Social Aren’t Enough

- NeuroRank™: The LLMO Operating System

- The Cost of Inaction: Addressing C-Suite Hesitation

- The Financial Mandate: Impact on PPC Budgets

- The Inclusion Math: Exponential Lead Generation

- Executive Action Plan: 2026 Strategic Roadmap

How Have the Mechanics of Search Changed in 2026?

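For two decades, the "lookup" model defined the internet. A user typed a keyword and a search engine matched it to a database. As of H1 2026, this model is obsolete. AI reasoning layers now decompose a single prompt into multiple sub-intents (Query Fan-Out) and build an answer across all of them. We have entered the era of the Reasoning Auction.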

If your brand is only optimized for the keyword "revenue operations," you miss the 90% of the conversation happening in the "fan-out" phase. To stay relevant, enterprise leaders must move from keyword silos to Intent Architectures.
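To make the fan-out idea concrete, here is a minimal Python sketch, assuming a hand-written fan-out map. The prompt, the five sub-queries and the `coverage` helper are all hypothetical: real AI systems generate their sub-queries internally and do not expose them. The sketch compares a brand that only has content for the head keyword with one whose content spans the sub-intents.

```python
# Hypothetical fan-out: real AI systems generate these sub-queries internally;
# this hand-written map only illustrates the shape of the problem.
FAN_OUT = {
    "how do we fix revenue operations for a global sales team": [
        "revenue operations software",
        "crm data quality across regions",
        "sales forecasting accuracy",
        "pipeline reporting in multiple currencies",
        "revops team structure",
    ],
}


def coverage(sub_queries: list[str], brand_topics: set[str]) -> float:
    """Return the share of sub-queries that contain at least one topic the brand covers."""
    if not sub_queries:
        return 0.0
    hits = sum(1 for q in sub_queries if any(topic in q for topic in brand_topics))
    return hits / len(sub_queries)


prompt = "how do we fix revenue operations for a global sales team"
keyword_only = {"revenue operations"}
intent_architecture = {"revenue operations", "crm data quality", "forecasting",
                       "pipeline reporting", "revops"}

print(coverage(FAN_OUT[prompt], keyword_only))         # 0.2
print(coverage(FAN_OUT[prompt], intent_architecture))  # 1.0
```

The 20% figure is an artifact of this toy map, not a measurement; the point is that coverage is scored per sub-intent rather than per keyword.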

What is the Reality of the Intent Auction?

The "Intent Auction" is fundamentally different from the bidding wars of the past. In this new landscape, Google’s AI infers commercial needs from purely informational prompts.

Consider a user troubleshooting a complex cloud latency issue. They aren't "shopping." However, the AI reasoning layer detects a problem that specific enterprise solutions can solve. It serves ads for managed service providers alongside the technical explanation. While the user didn't ask for a vendor, the AI inferred they would need one.

As of 2026, if your campaign structure assumes people search in isolated, transactional moments, you are missing the journey entirely. You aren't just competing against other brands; you are competing for a slot in the AI’s logical conclusion.

Why LLMO is the New Cognitive Moat

Traditional SEO was about being "found." LLMO (Large Language Model Optimization) is about being "understood" and "trusted" by the models that now act as the internet's gatekeepers.

When a CMO asks Gemini, "Which ERP is best for a multi-national logistics firm?", the AI doesn't scroll through Page 1. It accesses its internal "mental model" of the industry. Brands that have not optimized for LLM consumption suffer from Category Collapse: the AI behaves as if they do not exist because it lacks "Inference Confidence" in their data.

The "Stack Gap": Why SEO, PPC, and Social Aren't Enough

A common question from the C-suite is: "If I am already investing in SEO, PPC and Social Media, why do I need a separate budget for LLMO? Can’t my SEO team just handle this?"

This is the "Stack Gap" fallacy.

- SEO is for Crawlers; LLMO is for Cognition: Standard SEO teams focus on keyword density, backlinks and Core Web Vitals to satisfy a crawler. LLMO focuses on Semantic Reasoning. A bot can index your page, but an LLM must "comprehend" your value proposition to recommend it in a dialogue.

- The In-House Bottleneck: Most in-house SEO teams are equipped with legacy tools designed for link-based ranking. LLMO requires NeuroRank™ Semantic Engineering, a different skill set focused on shaping what models learn and retrieve, not just ranking pages.

- Social is Ephemeral; AI is Persistent: Social media drives short-term spikes. LLMO builds the Permanent Neural Memory of the models. If your brand isn't in the training set or the RAG (Retrieval-Augmented Generation) pipeline, your viral social post won't save you from being omitted in an AI recommendation.

- The Intent Blindspot: Traditional teams work in silos. NeuroRank™ bridges the silos, ensuring that the "why" found in your social engagement is translated into the "signals" that feed your PPC auction and AI summaries.

Asking an SEO team to do LLMO is like asking a print mechanic to build a jet engine: the physics have changed. You need a governed system like NeuroRank™ to translate brand authority into Model Trust.

NeuroRank™: The LLMO Operating System

At Pulp Strategy, we recognized that "doing LLMO" isn't a task: it’s a governed system. This led to the development of NeuroRank™.

NeuroRank™ is not a tool; it is an orchestration layer that ensures your brand’s semantic DNA is woven into the training and retrieval sets of major LLMs (Gemini, ChatGPT, Claude). It operates on three core pillars:

- Signal Mapping: Diagnosing where AI models have "blind spots" regarding your brand's unique value proposition.

- Semantic Engineering: Re-architecting your enterprise content, including multimodal assets like YouTube demos and technical whitepapers, so it is "parse-ready" for AI reasoning.

- Source Conditioning: Strategically seeding brand trust across the third-party platforms that AI models use for verification, such as industry-specific forums and specialized repositories.
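One widely supported way to make product facts "parse-ready" is schema.org markup embedded as JSON-LD. The sketch below is a minimal Python example of that standard technique, not the NeuroRank™ method itself; the product name, URLs and description are placeholders, and `build_software_jsonld` is a hypothetical helper.

```python
import json


def build_software_jsonld(name: str, url: str, description: str,
                          publisher: str, same_as: list[str]) -> str:
    """Return a <script> tag with schema.org SoftwareApplication markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "SoftwareApplication",
        "name": name,
        "url": url,
        "description": description,
        "applicationCategory": "BusinessApplication",
        "publisher": {"@type": "Organization", "name": publisher},
        # sameAs links the entity to profiles that third parties can verify.
        "sameAs": same_as,
    }
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")


snippet = build_software_jsonld(
    name="ExampleCo Ledger",
    url="https://www.example.com/ledger",
    description="Multi-entity ERP for multinational logistics firms.",
    publisher="ExampleCo",
    same_as=["https://www.example.com/about"],
)
print(snippet)
```

The output is meant to be placed in the page's HTML; the `sameAs` list is where links to verifiable third-party profiles would go.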

The NeuroRank™ Implementation Framework: 30-Day Sprint to Presence

To secure a seat in the reasoning layer, enterprise firms cannot wait for quarterly cycles. NeuroRank™ deploys a high-velocity framework:

- Inference Gap Audit (Days 1-7): We query all major LLMs to identify "Knowledge Hallucinations" or omissions regarding your category dominance.

- Semantic Injection (Days 8-21): We overhaul your data feeds and schema using high-density semantic tags that allow AI to cite your brand without ambiguity.

- Trust Verification (Days 22-30): We programmatically distribute validation signals to external high-authority domains, "forcing" the AI to update its confidence score for your brand.
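The audit step can be illustrated with a small Python sketch that scores answers already collected from each model. It makes no API calls; the model labels, prompts, answers and brand names are invented placeholders, and whole-word string matching is a simplifying assumption (a real audit would also need to catch paraphrases and misattributions).

```python
import re

# Placeholder answers collected beforehand: (model, prompt, answer text).
RESPONSES = [
    ("model_a", "best ERP for multinational logistics", "Options include AcmeERP and NorthStar."),
    ("model_a", "ERP with customs compliance", "NorthStar is often cited here."),
    ("model_b", "best ERP for multinational logistics", "Consider NorthStar or Globex."),
    ("model_b", "ERP with customs compliance", "AcmeERP and Globex both support this."),
]


def mentions(answer: str, name: str) -> bool:
    """Whole-word, case-insensitive match for a brand name."""
    return re.search(rf"\b{re.escape(name)}\b", answer, flags=re.IGNORECASE) is not None


def inclusion_rates(responses, names):
    """Share of collected answers that mention each name."""
    total = len(responses)
    return {n: (sum(mentions(a, n) for _, _, a in responses) / total if total else 0.0)
            for n in names}


def omissions(responses, brand):
    """(model, prompt) pairs whose answer never mentions the brand."""
    return [(m, p) for m, p, a in responses if not mentions(a, brand)]


print(inclusion_rates(RESPONSES, ["AcmeERP", "NorthStar", "Globex"]))
# {'AcmeERP': 0.5, 'NorthStar': 0.75, 'Globex': 0.5}
print(omissions(RESPONSES, "AcmeERP"))
```

The omissions list is the starting point for the next phase: each (model, prompt) pair marks a gap to investigate.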

The Cost of Inaction: Addressing C-Suite Hesitation

For the CEO or CMO currently "refusing" to invest in LLMO, the hesitation usually stems from a misunderstanding of Neural Real Estate. In the keyword era, you could buy your way back into the market at any time. In the intent era, AI models are "trained" on historical consistency and trust, so ground lost today cannot simply be bought back later.

- The Squatter's Problem: If your competitors are the ones providing the "reasoning" that models use to answer category questions today, they effectively "squat" on that knowledge. Dislodging a competitor from the AI's primary citation set six months from now will cost 5x more than establishing your own presence today.

- The Reputation Risk of Silence: "Wait and see" is effectively a strategy of silence. When an AI doesn't find authoritative, LLMO-optimized data from your brand, it fills the gap with third-party interpretations or competitor comparisons.

- De-risking with NeuroRank™: NeuroRank™ provides what no other LLMO system can: Absolute Visibility. It allows you to see exactly how LLMs perceive, categorize and recommend your brand in real time. We diagnose the Sentiment, identify the Knowledge Gaps and pinpoint the origin of hallucinations. Most importantly, NeuroRank™ doesn't just suggest a fix; it validates it via a proprietary Conditioning Loop, ensuring your brand remains a high-confidence recommendation.

The Financial Mandate: How This Impacts Your PPC Budget

The most common question we hear from CFOs is: "If the AI is doing the work, why do I still need a PPC budget?"

The Learning Tax & Budget Barriers: New campaigns in 2026 face a "Scissors Gap": the distance between the conversion volume automated bidding needs and what a limited budget can generate. AI-powered systems like AI Max need a minimum of 30 conversions in 30 days to scale. If your budget is too low to hit this threshold, the algorithm never "primes," and your CPCs remain high indefinitely.
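The budget barrier is simple arithmetic. The Python sketch below takes the 30-conversions-in-30-days threshold from this section as a parameter (check your platform's current guidance rather than treating it as fixed) and uses an illustrative $400 cost per conversion; both function names are hypothetical.

```python
THRESHOLD = 30    # conversions, per the figure cited in this section
WINDOW_DAYS = 30  # length of the learning window in days


def min_budget(cost_per_conversion: float, threshold: int = THRESHOLD,
               window_days: int = WINDOW_DAYS) -> tuple[float, float]:
    """Return (total spend over the window, average daily spend) needed to reach the threshold."""
    total = cost_per_conversion * threshold
    return total, total / window_days


def clears_threshold(budget: float, cost_per_conversion: float,
                     threshold: int = THRESHOLD) -> bool:
    """True if the budget can buy at least `threshold` conversions at the given cost."""
    return budget / cost_per_conversion >= threshold


print(min_budget(400.0))                # (12000.0, 400.0)
print(clears_threshold(8000.0, 400.0))  # False: only about 20 conversions
```

At a $400 cost per conversion, an $8,000 monthly budget buys roughly 20 conversions, so the campaign stays below the threshold no matter how well it is managed.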

The Creative-to-Spend Ratio: We are seeing a massive shift where budget is diverted from "bid management" to asset production and feed optimization. The AI requires rich metadata and multiple high-quality images to match the diverse sub-queries generated by Query Fan-Out.

Adjusting Success Metrics: Success must be redefined to measure how these conversational interactions prime the user for downstream conversion.

The Inclusion Math: Exponential Lead Generation

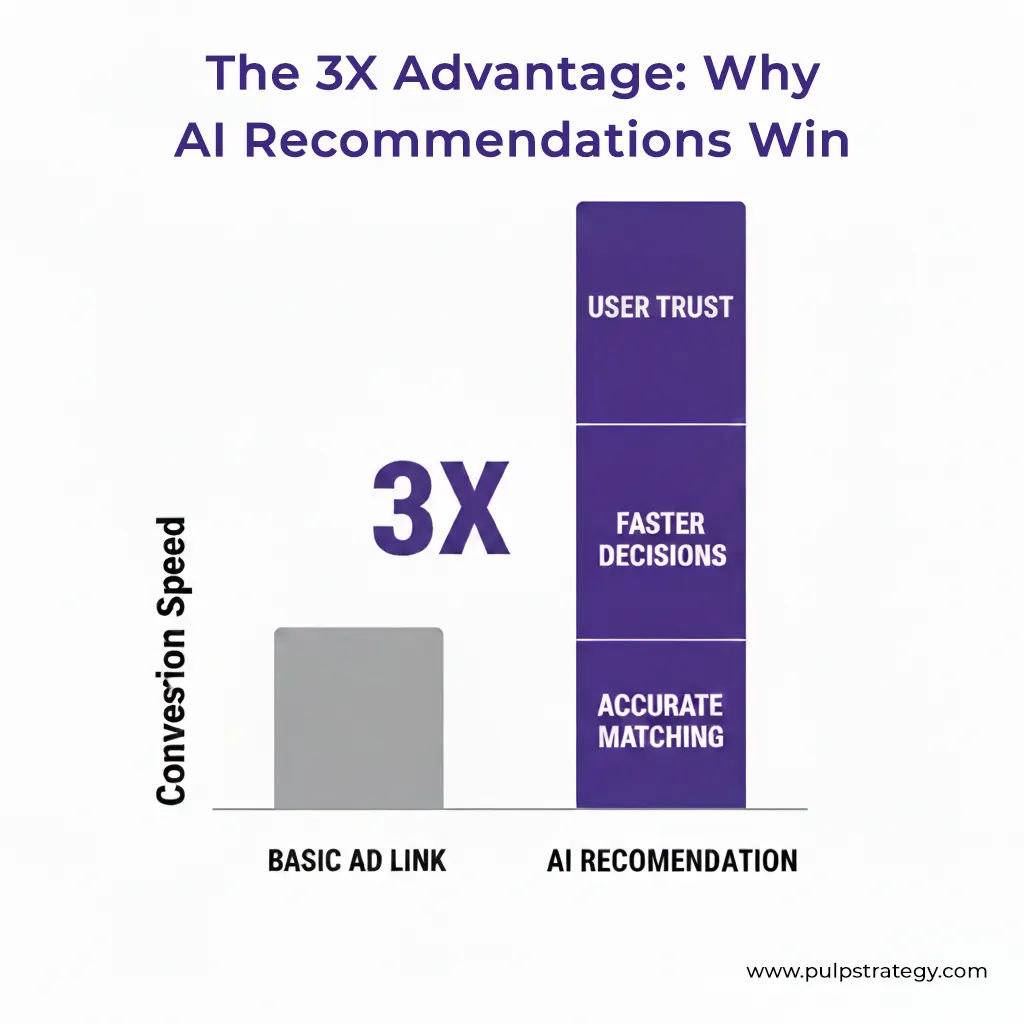

The difference between being a "link" and being a "recommendation" is a 3x multiplier on conversion. This is the Inclusion Math.
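One way to write the Inclusion Math as a worked equation, assuming leads factor into three terms (the symbols are illustrative, not a formula from the original analysis): $Q$ is the number of relevant AI-assisted queries in the category, $p$ the share of those answers that include the brand, and $c$ the conversion rate once included. Taking the 3x figure above as $c_{\text{rec}} = 3\,c_{\text{link}}$:

$$
L = Q \cdot p \cdot c, \qquad
\frac{L_{\text{rec}}}{L_{\text{link}}} = \frac{Q \cdot p \cdot 3\,c_{\text{link}}}{Q \cdot p \cdot c_{\text{link}}} = 3
$$

Because the terms multiply, raising inclusion $p$ by a factor $a$ while also moving from link to recommendation scales leads by $3a$: the two levers compound rather than add.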

Case Study Proof: In a recent "BFSI Brand LLMO Optimization" execution for a Tier-1 client, implementing NeuroRank™ alongside AI Max for Search resulted in a 22% reduction in Cost-per-MQL within 30 days. This speed reflects a significant first-mover advantage; as that advantage narrows, we expect comparable results to take 30 to 60 days, depending on brand maturity. More importantly, "Prompt Inclusion" rose by 410%.

Executive Action Plan: 2026 Strategic Roadmap

The window for gaining a "First-Mover" advantage in the reasoning layer is closing. Here is your mandate for the current year:

Q2 2026: Conversational Asset Pilot. Transition your creative team to build "Dialogue-Ready" assets. These aren't just display ads; they are interactive modules that answer follow-up questions in AI Mode using your verified brand data.

Q3 2026: Contribution Scoring Adoption. Move away from Last-Click or Data-Driven Attribution. Adopt "Contribution Scoring," which uses the AI reasoning layer to measure how high-funnel conversational interactions influence the final sale, even without a direct click.
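The exact mechanics of Contribution Scoring are not specified here, so the Python sketch below swaps in plain linear multi-touch attribution purely to show why last-click hides conversational touchpoints. The touchpoint names and the $90,000 deal value are hypothetical.

```python
def last_click(path: list[str], value: float) -> dict[str, float]:
    """All credit goes to the final touchpoint."""
    credit = {t: 0.0 for t in path}
    credit[path[-1]] += value
    return credit


def linear(path: list[str], value: float) -> dict[str, float]:
    """Credit is split evenly across every touch in the path."""
    credit = {t: 0.0 for t in path}
    for t in path:
        credit[t] += value / len(path)
    return credit


journey = ["ai_mode_answer", "ai_mode_follow_up", "brand_search_ad"]
print(last_click(journey, 90_000.0))
# {'ai_mode_answer': 0.0, 'ai_mode_follow_up': 0.0, 'brand_search_ad': 90000.0}
print(linear(journey, 90_000.0))
# {'ai_mode_answer': 30000.0, 'ai_mode_follow_up': 30000.0, 'brand_search_ad': 30000.0}
```

Under last-click, the two conversational interactions receive nothing; any model that spreads credit, however it weights the touches, makes their influence visible.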

Q4 2026: Deep Link Reasoning and Verification. Prepare for Google’s "Verification Badges." Audit and overhaul landing pages to pass the AI's "Deep Reasoning" check, ensuring eligibility for the most prominent and trusted AI Mode placements.

Key Takeaways for the C-Suite

- Keywords are seeds, not targets. Use them to guide the AI, not to restrict it.

- LLMO is non-negotiable. If the AI doesn't "understand" your brand context, you are invisible.

- PPC is for Data Priming. Use your budget to teach the AI who your high-value customers are via Customer Match and first-party data.

- NeuroRank™ is the bridge. It moves you from the "Search Index" to the "Reasoning Layer" faster, with a planned roadmap.
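Customer Match uploads are typically normalized and hashed before they leave your systems. A minimal Python sketch, assuming email addresses only: it trims whitespace, lowercases and applies SHA-256, as Google's Customer Match guidelines call for. Check the current guidelines for any additional normalization rules before uploading. The helper name is hypothetical.

```python
import hashlib


def hash_email_for_upload(email: str) -> str:
    """Trim, lowercase and SHA-256 hash an email address (hex digest)."""
    normalized = email.strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


emails = ["  Jane.Doe@Example.com ", "jane.doe@example.com"]
hashed = {hash_email_for_upload(e) for e in emails}
print(len(hashed))  # 1: both variants normalize to the same hash
```

Normalizing before hashing matters because SHA-256 is exact: a single stray space or capital letter produces a different digest that will not match.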

Is your brand ready for the Intent Era? Stop chasing the words your customers say. Start mastering the goals they have. Contact Pulp Strategy today for a NeuroRank™ Readiness Audit and reclaim your place in the conversation.
