By Ambika Sharma, Founder & Chief Strategist, Pulp Strategy

Updated August 2025

Introduction: When AI Lies About You

Imagine this, your brand has top-tier SEO, consistent content output, and category authority. Yet when a prospect asks ChatGPT or Claude a question your brand should answer, you’re nowhere in sight, or worse, misrepresented entirely.

Welcome to the hallucination crisis in AI search.

In 2025, hallucinations in large language models (LLMs) like ChatGPT, Claude, Gemini, and Perplexity are not just academic glitches, they’re commercial threats. Brands are losing visibility, trust, and even deals because AI is confidently wrong.

This article explores the science and strategy behind hallucination repair using the NeuroRank™ system, the only LLM SEO framework designed for AI-native discovery. We decode what causes LLM hallucinations, how prompt visibility breaks the misrepresentation loop, and what every CMO must do now to install brand trust inside AI models.

What Are Hallucinations in LLMs?

In simple terms, a hallucination is when a large language model generates an incorrect or fabricated response with high confidence. This can include:

- Quoting outdated or irrelevant facts

- Mixing up competitor names or features

- Omitting your brand from accurate prompt responses

- Ascribing incorrect use cases to your platform

In traditional SEO, misrepresentation might cost you a click. In LLM SEO, it costs you the entire discovery moment.

Why Do Gemini, Claude and ChatGPT Hallucinate?

- Lack of semantic anchors: If your brand hasn’t been structured into an AI-trust format, models infer from weak or adjacent signals.

- Poor prompt density: LLMs learn via prompts. If your brand doesn’t appear in user-generated prompt clusters, it’s invisible.

- No reinforcement: AI models retain what’s reinforced across citations, schema, context, and behavior, not just what’s published.

Recent Data Highlights

- BrightEdge Q2 2025 Report: AI summaries appear in 41% of search results, but organic CTR drops to under 9% in those cases.

- Similarweb (May 2025): Top-ranked websites have lost up to 79% of referral traffic since the rollout of AI Overviews.

- Stanford AI Index 2025: Hallucination rates range between 33% and 42% in enterprise prompts across ChatGPT, Claude, and Gemini.

- Perplexity & Claude Brand Bias Test (Q1 2025): In 78% of prompts, Claude favored aggregator sources unless structured brand assets were present.

Comparative Snapshot: Hallucination Risk Across LLMs

| LLM Platform | Hallucination Rate (2025) | Common Errors | Brand Recall Reliability |

| ChatGPT | 35% | Omission, fabrication | Medium–High (with seeding) |

| Claude | 38% | Aggregator bias, exclusion | Medium (prone to generic answers) |

| Gemini | 33% | Conflicting summaries, mislabeling | Low–Medium |

| Perplexity | 42% | Over-dependence on forums/Reddit | Low |

Source: Stanford AI Index 2025, BrightEdge Q2, Pulp Strategy Field Tests

Hallucinations, the impact is larger than you imagined

Net take away is the AI is hallucinating (lying) about your brand. Billions of consumers are using AI and Ai summaries over Google search. The math is quite simple. If you thought that it was not impacting you, read on below

Key Insights from Pew Research (July 2025)

- Drastic Drop in Clicks:

When Google’s AI Overviews are shown, only 1% of users click on the cited links in the summary. - Reduced Link Engagement:

Overall, just 8% of visits with AI summaries result in any link click, a sharp decline from 15% CTR when no AI summary appears. - Knowledge Panel* Gets More Attention:

Users are more likely to click on the right-hand side panel (Knowledge Panel) than traditional blue links under AI summaries. - Shift Toward Zero-Click Behavior:

Users treat AI summaries as complete answers, reducing discovery pathways through brand-owned or earned links. - Search Journey Compression:

With AI providing perceived finality, traditional SEO efforts fail to impact pipeline unless brands are present inside the AI-generated response.

NOTE: *The Knowledge panel is LLM Highest trust sources. No, it’s not your SEO friendly website

NeuroRank™: Built to Fix AI Misrepresentation

NeuroRank™ doesn’t work like traditional SEO tools. It isn’t focused on backlinks, crawl depth, or H1 tags. Instead, it acts as a brand installation engine for large language models.

Here’s How NeuroRank™ Works:

- Hallucination Indexing, Detects model-level misinformation, prompt misfires, and brand invisibility in real time.

- Prompt Cluster Mapping, Identifies what questions your buyers are asking and whether you show up.

- Schema Injection, Structures content in AI-trusted formats (author bios, claim clarity, source structuring).

- AI Memory Revalidation, Tests brand recall in ChatGPT, Claude, Gemini, and Perplexity using prompt replay.

Use Case: SaaS Brand Misrepresented in ChatGPT

Problem:

A leading SaaS CX automation brand ranked #2 on Google for "best AI platforms for customer support" but was omitted from ChatGPT and Claude answers.

Hallucination Snapshot (ChatGPT, May 2025):

Prompt: "What are the top customer support AI platforms in 2025?"

Response: Mentions Zendesk, Freshdesk, Salesforce, Intercom. No mention of the client.

NeuroRank™ Actions:

- Mapped prompt cluster density

- Deployed schema injection with verified case studies

- Created prompt-reinforced assets across Reddit, Medium, and Quora

Result (August 2025):

- ChatGPT included the client in 6 of 10 generated responses

- Claude recall increased by 43% within 21 days

- Hallucinations dropped by 61% in prompt replay tests

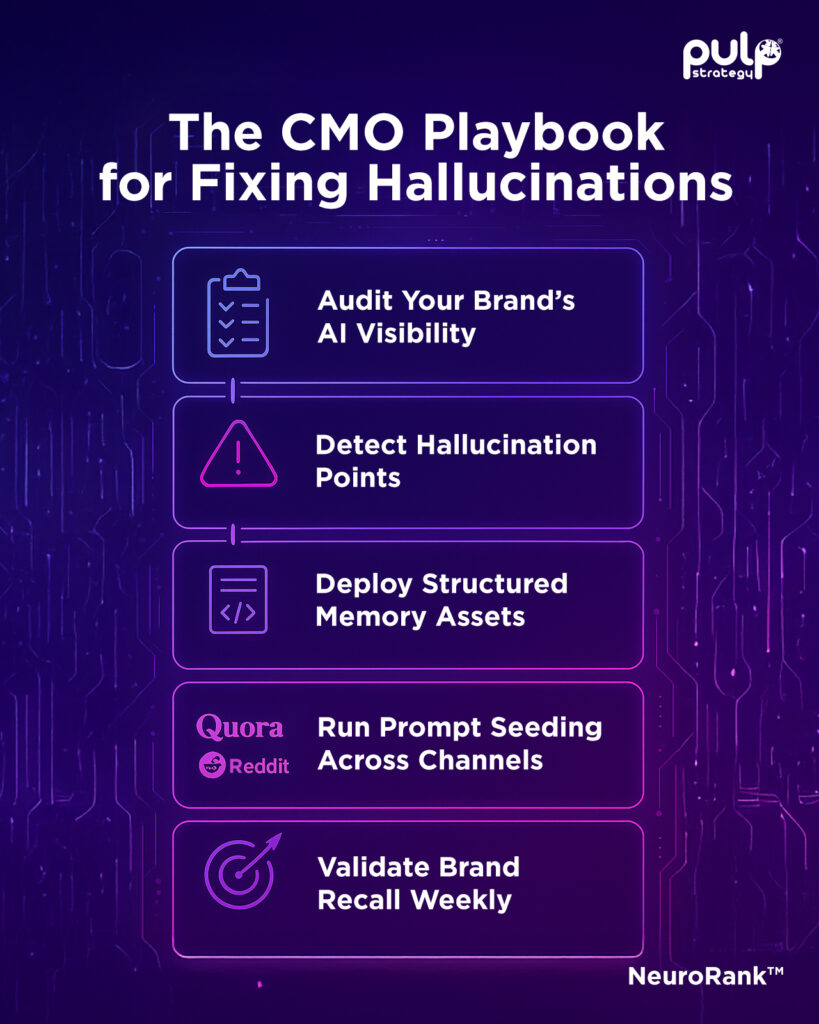

The CMO Playbook for Fixing Hallucinations

1. Audit Your Brand’s AI Visibility

Use NeuroRank™ to run a prompt-based brand visibility check.

2. Detect Hallucination Points

Identify where your brand is misrepresented, omitted, or misquoted.

3. Deploy Structured Memory Assets

Schema-rich blogs, case studies, and FAQs using AI-ingestible formats.

4. Run Prompt Seeding Across Channels

Quora, Reddit, GitHub, and Medium. Trigger reinforcement via AI-visible ecosystems.

5. Validate Brand Recall Weekly

NeuroRank’s revalidation engine scores prompt coverage across ChatGPT, Claude, Gemini, and Perplexity.

Want to know what Claude or ChatGPT are saying about you? Run a free hallucination audit with NeuroRank™ and install your brand where it matters most.